Introduction #

The authors proposed the CLVOS23: A Long Video Object Segmentation Dataset for Continual Learning - new long-video object segmentation dataset for continual learning, as a realistic and significantly greater challenge for testing VOS(Video Object Segmentation) methods on long videos. The frames for dataset were taken from the Long Videos dataset (rat, dressage, blueboy videos) and from the YouTube (car, dog, parkour, skating, skiing, skiing-long videos).

Motivation

The goal of Video Object Segmentation (VOS) is to accurately extract a target object at the pixel level from each frame of a given video. VOS solutions are generally divided into two categories: semi-supervised (or one-shot) VOS, where ground-truth masks of the target objects are provided for at least one frame during inference, and unsupervised VOS, where the model has no prior knowledge about the objects.

In semi-supervised VOS, online approaches update part of the VOS model based on evaluated frames and estimated masks. The idea is that videos contain relevant information beyond just the current frame’s mask, which a model can leverage by learning during the evaluation process. However, online model learning raises questions about how effectively the model adapts from frame to frame, especially when new frames differ significantly from the initial ground-truth frame. This challenge falls under the domain of continual learning, a type of machine learning where a model is trained on a sequence of tasks and is expected to continuously improve its performance on each new task while maintaining its ability to perform well on previously learned tasks.

Current state-of-the-art semi-supervised and online VOS methods excel on short videos, typically a few seconds or up to 100 frames long, as seen in datasets like DAVIS16, DAVIS17, and YouTube-VOS18. However, these methods often struggle to maintain performance on long videos, such as those found in the Long Videos dataset. This issue has not been thoroughly investigated or addressed within the VOS field, particularly through the lens of continual learning.

Continual learning methods are usually evaluated on classification datasets like MNIST, CIFAR10, and ImageNet, or on datasets specifically designed for continual learning, such as Core50. In these scenarios, the classification dataset is presented to the model as a sequential data stream in online continual learning methods. Unlike these datasets and testing scenarios, long video object segmentation has numerous real-world applications, including video summarization, human-computer interaction, and autonomous vehicles, which necessitate robust performance over extended sequences.

Dataset description

In the ideal case, where the samples in a video sequence are independent and identically distributed (i.i.d.), machine learning problems are made significantly easier, since there is then no need to handle distributional drift and temporal dependency in VOS. However, i.i.d. assumption is not valid in video data.

The dataset consisted of three long sequences with a total of 7411 frames. The i.i.d. assumption is invalid for “dressage” videos due to the significant distribution drifts that occur, which align more closely with the non-i.i.d. assumptions of continual learning. This new continual learning-based interpretation of long video sequences is being discussed for the first time in the context of VOS and continual learning. The Long Videos dataset currently selects evaluation label masks uniformly, failing to adequately test how well a VOS solution handles sudden shifts in the target’s appearance. The authors propose an alternative approach: annotating frames for evaluation based on the distribution drifts occurring in each video sequence.

A subset of frames from “dressage” video of the Long Videos dataset. The video consists of 23 sub-chunks that are separated from each other by significant distributional drifts or discontinuities. The lower (sparse) row, in each set, show the annotated frames. The annotations provided by are shown without a border, whereas the annotated masks added via this paper, and made available via the CLVOS23 dataset, are shown with blue borders. The four sub-chunks that are missing from the Long Videos dataset are encircled in red.

Image above shows 23 sub-chunks of videos in the “dressage” video of the Long Videos dataset. Each sub-chunk is separated from its previous and next sub-chunks based on the distribution drifts. When an online or offline event, such as a sports competition, is recorded using multiple cameras, these distribution drifts are common in mediaprovided videos. As a result, in the authors proposed dataset, they first utilize the following strategy to select candidate frames for annotation and evaluation.

- They select the first frame of each sub-chunk S. It is interesting to see how VOS models handle the distribution drift that happens in the sequence, which is arriving a new task in continual learning.

- The last frame of each sequence is also selected. The first frame ground truth label mask is given to the model as it is set in the semi-supervised VOS scenario.

- One frame from the middle of each sub-chunk is also selected for being annotated.

For CLVOS23, in addition to the 3 videos from the Long Videos dataset, the authors added the other 6 videos form YouYube. All frames of the 6 new added videos are extracted with the rate of 15 Frames Per Second (FPS). To ensure that all distribution drifts are captured, the authors only annotate the first frame of each sub-chunk in the Long Videos dataset and add them to the uniformly selected annotated frames. The proposed dataset has following advantages over the Long Videos dataset.

- It added 5951 frames to 7411 frames of the Long Videos dataset.

- CLVOS23 increased the number of annotation frames from 63 in the Long Videos dataset to 284.

- It increases the number of videos from 3 to 9.

- The selected annotated frames are chosen based on the distribution drift that happens in the videos (subchunks) rather than being uniformly selected.

| Video name | #Sub-chunks (tasks) | #Frames | #Annotated frames |

|---|---|---|---|

| dressage | 23 | 3589 | 43 |

| blueboy | 27 | 2406 | 47 |

| rat | 22 | 1416 | 42 |

| car | 18 | 1109 | 37 |

| dog | 12 | 891 | 25 |

| parkour | 24 | 1578 | 49 |

| skating | 5 | 778 | 11 |

| skiing | 5 | 692 | 11 |

| skiing-long | 9 | 903 | 19 |

Each video sequence’s specifications in the proposed CLVOS23 dataset. The first three videos (Dressage, Blueboy, and Rat) are taken directly from the Long Videos dataset and the authors added additional annotated ground-truth frames to each of them to make them more appropriate for continual learning.

It is worth noting that for a long VOS dataset, it is very expensive and sometimes unnecessary to annotate all the frames of videos for evaluation. It is worth mentioning that the authors utilized the Toronto Annotation Suite to annotate the selected frames for evaluation. The frames of new 6 videos were resized to have a height of 480 pixels. The width of each frame is defined as proportionate to its height.

Summary #

CLVOS23: A Long Video Object Segmentation Dataset for Continual Learning is a dataset for semantic segmentation, object detection, and semi supervised learning tasks. It is applicable or relevant across various domains.

The dataset consists of 13362 images with 284 labeled objects belonging to 5 different classes including person, dressage, rat, and other: car and dog.

Images in the CLVOS23 dataset have pixel-level semantic segmentation annotations. There are 13078 (98% of the total) unlabeled images (i.e. without annotations). There are no pre-defined train/val/test splits in the dataset. Alternatively, the dataset could be split into 9 videos names: dressage (3589 images), blueboy (2406 images), parkour boy (1578 images), rat (1416 images), car (1109 images), skiing slalom (903 images), dog (891 images), skating (778 images), and skiing (692 images). The dataset was released in 2023 by the University of Waterloo, Canada.

Explore #

CLVOS23 dataset has 13362 images. Click on one of the examples below or open "Explore" tool anytime you need to view dataset images with annotations. This tool has extended visualization capabilities like zoom, translation, objects table, custom filters and more. Hover the mouse over the images to hide or show annotations.

Class balance #

There are 5 annotation classes in the dataset. Find the general statistics and balances for every class in the table below. Click any row to preview images that have labels of the selected class. Sort by column to find the most rare or prevalent classes.

Class ㅤ | Images ㅤ | Objects ㅤ | Count on image average | Area on image average |

|---|---|---|---|---|

person➔ mask | 137 | 137 | 1 | 8.22% |

dressage➔ mask | 43 | 43 | 1 | 9.77% |

rat➔ mask | 42 | 42 | 1 | 5.56% |

car➔ mask | 37 | 37 | 1 | 13.74% |

dog➔ mask | 25 | 25 | 1 | 3.96% |

Co-occurrence matrix #

Co-occurrence matrix is an extremely valuable tool that shows you the images for every pair of classes: how many images have objects of both classes at the same time. If you click any cell, you will see those images. We added the tooltip with an explanation for every cell for your convenience, just hover the mouse over a cell to preview the description.

Images #

Explore every single image in the dataset with respect to the number of annotations of each class it has. Click a row to preview selected image. Sort by any column to find anomalies and edge cases. Use horizontal scroll if the table has many columns for a large number of classes in the dataset.

Object distribution #

Interactive heatmap chart for every class with object distribution shows how many images are in the dataset with a certain number of objects of a specific class. Users can click cell and see the list of all corresponding images.

Class sizes #

The table below gives various size properties of objects for every class. Click a row to see the image with annotations of the selected class. Sort columns to find classes with the smallest or largest objects or understand the size differences between classes.

Class | Object count | Avg area | Max area | Min area | Min height | Min height | Max height | Max height | Avg height | Avg height | Min width | Min width | Max width | Max width |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

person mask | 137 | 8.21% | 31.31% | 0.08% | 25px | 5.21% | 480px | 100% | 273.04px | 56.88% | 31px | 3.63% | 567px | 66.47% |

dressage mask | 43 | 9.77% | 75.5% | 0.35% | 38px | 10.56% | 360px | 100% | 194.02px | 53.9% | 23px | 4.79% | 428px | 89.17% |

rat mask | 42 | 5.55% | 25.89% | 0.12% | 41px | 8.54% | 420px | 87.5% | 174.81px | 36.42% | 27px | 2.81% | 471px | 49.06% |

car mask | 37 | 13.74% | 81.51% | 0.12% | 17px | 3.54% | 399px | 83.12% | 129.32px | 26.94% | 39px | 4.57% | 853px | 100% |

dog mask | 25 | 3.96% | 24.58% | 0.1% | 29px | 6.04% | 406px | 84.58% | 147.84px | 30.8% | 17px | 1.99% | 573px | 67.17% |

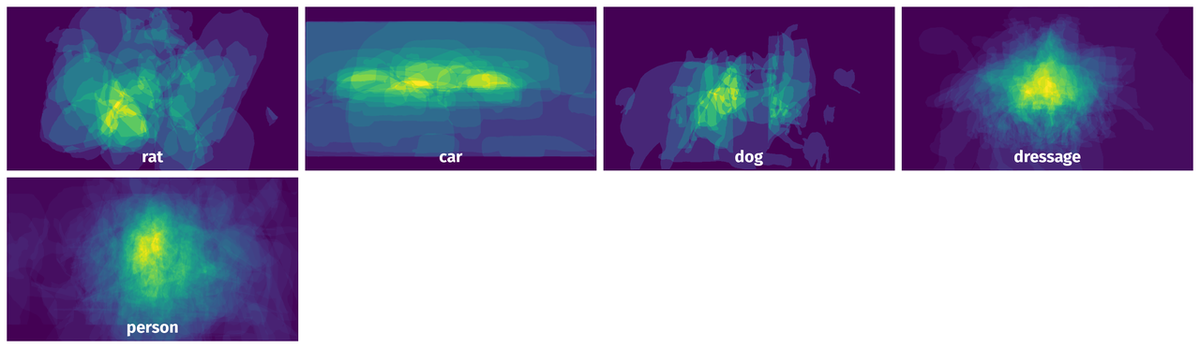

Spatial Heatmap #

The heatmaps below give the spatial distributions of all objects for every class. These visualizations provide insights into the most probable and rare object locations on the image. It helps analyze objects' placements in a dataset.

Objects #

Table contains all 284 objects. Click a row to preview an image with annotations, and use search or pagination to navigate. Sort columns to find outliers in the dataset.

Object ID ㅤ | Class ㅤ | Image name click row to open | Image size height x width | Height ㅤ | Height ㅤ | Width ㅤ | Width ㅤ | Area ㅤ |

|---|---|---|---|---|---|---|---|---|

1➔ | rat mask | rat_05559.jpg | 480 x 960 | 167px | 34.79% | 251px | 26.15% | 6.65% |

2➔ | person mask | parkour_boy_0085.jpg | 480 x 853 | 164px | 34.17% | 97px | 11.37% | 1.66% |

3➔ | rat mask | rat_01746.jpg | 480 x 960 | 95px | 19.79% | 63px | 6.56% | 0.99% |

4➔ | person mask | skiing_0546.jpg | 480 x 853 | 42px | 8.75% | 41px | 4.81% | 0.18% |

5➔ | dressage mask | dressage_12255.jpg | 360 x 480 | 114px | 31.67% | 81px | 16.88% | 2.33% |

6➔ | rat mask | rat_04902.jpg | 480 x 960 | 198px | 41.25% | 323px | 33.65% | 8.66% |

7➔ | person mask | skiing_slalom_0617.jpg | 480 x 853 | 82px | 17.08% | 112px | 13.13% | 0.83% |

8➔ | person mask | blueboy_00909.jpg | 480 x 853 | 480px | 100% | 332px | 38.92% | 24.19% |

9➔ | car mask | car_0090.jpg | 480 x 853 | 61px | 12.71% | 98px | 11.49% | 1.2% |

10➔ | person mask | blueboy_01188.jpg | 480 x 853 | 406px | 84.58% | 203px | 23.8% | 13.32% |

License #

CLVOS23: A Long Video Object Segmentation Dataset for Continual Learning is under CC BY-NC-SA 4.0 license.

Citation #

If you make use of the CLVOS23 data, please cite the following reference:

@dataset{CLVOS23,

author={Amir Nazemi and Zeyad Moustafa and Paul Fieguth},

title={CLVOS23: A Long Video Object Segmentation Dataset for Continual Learning},

year={2023},

url={https://github.com/Amir4g/CLVOS23}

}

If you are happy with Dataset Ninja and use provided visualizations and tools in your work, please cite us:

@misc{ visualization-tools-for-clvos23-dataset,

title = { Visualization Tools for CLVOS23 Dataset },

type = { Computer Vision Tools },

author = { Dataset Ninja },

howpublished = { \url{ https://datasetninja.com/clvos23 } },

url = { https://datasetninja.com/clvos23 },

journal = { Dataset Ninja },

publisher = { Dataset Ninja },

year = { 2026 },

month = { jan },

note = { visited on 2026-01-15 },

}Download #

Dataset CLVOS23 can be downloaded in Supervisely format:

As an alternative, it can be downloaded with dataset-tools package:

pip install --upgrade dataset-tools

… using following python code:

import dataset_tools as dtools

dtools.download(dataset='CLVOS23', dst_dir='~/dataset-ninja/')

Make sure not to overlook the python code example available on the Supervisely Developer Portal. It will give you a clear idea of how to effortlessly work with the downloaded dataset.

The data in original format can be downloaded here.

Disclaimer #

Our gal from the legal dep told us we need to post this:

Dataset Ninja provides visualizations and statistics for some datasets that can be found online and can be downloaded by general audience. Dataset Ninja is not a dataset hosting platform and can only be used for informational purposes. The platform does not claim any rights for the original content, including images, videos, annotations and descriptions. Joint publishing is prohibited.

You take full responsibility when you use datasets presented at Dataset Ninja, as well as other information, including visualizations and statistics we provide. You are in charge of compliance with any dataset license and all other permissions. You are required to navigate datasets homepage and make sure that you can use it. In case of any questions, get in touch with us at hello@datasetninja.com.